EU AI ACT: A comprehensive overview of the new AI regulation framework

21 November 2024

Konstantia Moirogiorgou, Head of R&D, Seven Red Lines

In April 2021, the European Commission proposed the first EU regulatory framework for Artificial Intelligence (AI). On 9 December 2023, the Council of the EU and the European Parliament reached a provisional political agreement on the AI Act, and on 1 August 2024 the AI Act entered into force.

The law aims to harmonize rules on artificial intelligence and follows a ‘risk-based’ approach: the higher the risk, the stricter the rules. The AI Act is part of the EU’s broader digital strategy for managing the development and application of AI technologies. The European Artificial Intelligence Board, which includes a representative from each EU Member State, advises and assists the Commission and the Member States so that the regulation is applied consistently across the EU.

Among other things, the Regulation provides clear definitions of the following terms:

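‘AI system’: a machine-based system that is designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers from the input it receives how to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments;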

‘Risk’: the combination of the probability of an occurrence of harm and the severity of that harm;

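‘Reasonably foreseeable misuse’: the use of an AI system in a way that is not in accordance with its intended purpose, but which may result from reasonably foreseeable human behavior or interaction with other systems, including other AI systems;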

‘Notifying authority’: the national authority responsible for setting up and carrying out the necessary procedures for the assessment, designation and notification of conformity assessment bodies and for their monitoring;

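‘Serious incident’: an incident or malfunctioning of an AI system that directly or indirectly leads to the death of a person or serious harm to a person’s health, a serious and irreversible disruption of the management or operation of critical infrastructure, an infringement of obligations under Union law intended to protect fundamental rights, or serious harm to property or the environment.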
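The Act defines ‘risk’ qualitatively and does not prescribe a scoring formula. One common way to operationalize "the combination of the probability of an occurrence of harm and the severity of that harm" in an internal risk register is a risk matrix. The minimal sketch below assumes hypothetical 1 to 5 ordinal scales, a simple product score and illustrative band thresholds; none of these come from the regulation, and the names `HarmScenario` and `band` are made up for the example.

```python
from dataclasses import dataclass

# Hypothetical ordinal scales (1 = lowest, 5 = highest); the AI Act does not define them.
SCALE = range(1, 6)


@dataclass(frozen=True)
class HarmScenario:
    description: str
    probability: int  # likelihood that the harm occurs, 1..5
    severity: int     # seriousness of the harm if it occurs, 1..5

    def __post_init__(self) -> None:
        if self.probability not in SCALE or self.severity not in SCALE:
            raise ValueError("probability and severity must be integers from 1 to 5")

    def score(self) -> int:
        # Combine probability and severity as a simple product (an assumption).
        return self.probability * self.severity


def band(score: int) -> str:
    # Hypothetical thresholds for an internal risk register.
    if score >= 15:
        return "high"
    if score >= 6:
        return "medium"
    return "low"


scenario = HarmScenario("misclassified loan applicant", probability=3, severity=4)
print(scenario.score(), band(scenario.score()))  # 12 medium
```

A product is only one possible way to combine the two factors; some organizations use a lookup table instead, so that high-severity harms are never rated low regardless of probability.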

The AI Act applies to providers, deployers, importers and distributors of AI systems, including those located outside the EU when the output produced by their systems is intended to be used in the EU. It is important to note that it does not apply to research, testing or development activities regarding AI systems or AI models before they are placed on the market or put into service; testing in real-world conditions, however, is not covered by this exclusion.
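The scope rules above can be expressed as a rough screening check, for example when building an inventory of a company's AI systems. This is a simplified sketch under stated assumptions: the `AISystemContext` fields and the function `may_fall_in_scope` are hypothetical, and the Act's other scope provisions and exclusions are not modeled.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"


@dataclass
class AISystemContext:
    role: Role  # all four roles listed in the text are covered, so it does not change the result here
    established_in_eu: bool
    output_used_in_eu: bool
    # True while the system is still in research, testing or development
    # and has not yet been placed on the market or put into service.
    pre_market_rnd: bool
    # Testing in real-world conditions is not covered by the R&D exclusion.
    real_world_testing: bool = False


def may_fall_in_scope(ctx: AISystemContext) -> bool:
    """Rough screening check; a simplification of the Act's scope rules."""
    if ctx.pre_market_rnd and not ctx.real_world_testing:
        return False  # pre-market research, testing and development is excluded
    # Being located outside the EU does not help if the output is used in the EU.
    return ctx.established_in_eu or ctx.output_used_in_eu


us_provider = AISystemContext(Role.PROVIDER, established_in_eu=False,
                              output_used_in_eu=True, pre_market_rnd=False)
lab_prototype = AISystemContext(Role.PROVIDER, established_in_eu=True,
                                output_used_in_eu=False, pre_market_rnd=True)
print(may_fall_in_scope(us_provider), may_fall_in_scope(lab_prototype))  # True False
```

A `True` result only flags a system for closer review; the actual assessment depends on the full text of the regulation.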

The AI Act classifies AI systems according to the level of risk they present. It identifies four levels of risk:

Unacceptable: systems considered a clear threat to people, which are banned. Examples include cognitive behavioral manipulation of people or specific vulnerable groups, social scoring, biometric categorization of people based on sensitive characteristics, and real-time remote biometric identification systems, such as facial recognition, in publicly accessible spaces (permitted for law enforcement only under narrow exceptions).

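High: systems that can negatively affect safety or fundamental rights, such as AI used in critical infrastructure, education, employment or law enforcement. These systems must meet strict requirements before they are placed on the market and throughout their lifecycle.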

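Limited: systems whose main risk stems from a lack of transparency in AI usage, such as chatbots; people must be made aware that they are interacting with an AI system.

Minimal: all other systems, such as spam filters or AI-enabled video games, which are not subject to specific obligations under the Act.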
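The four-tier structure can be modeled as a simple lookup, for example in an internal register of AI systems. The sketch below is illustrative only: the `RiskTier` enum, the example use-case keys and the one-line obligation summaries are assumptions that condense the Act; real classification depends on the regulation's detailed criteria and annexes.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "prohibited"
    HIGH = "strict requirements before and after market placement"
    LIMITED = "transparency obligations"
    MINIMAL = "no specific obligations"


# Hypothetical mapping from example use cases to tiers, for illustration only.
EXAMPLE_USE_CASES = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "cv screening for recruitment": RiskTier.HIGH,
    "customer service chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    tier = EXAMPLE_USE_CASES.get(use_case.strip().lower())
    if tier is None:
        # Unknown use cases need a case-by-case assessment against the Act.
        return "unclassified: assess against the Act's criteria"
    return f"{tier.name.lower()} risk: {tier.value}"


for case in ("Social scoring", "spam filter", "route planner"):
    print(case, "->", obligations_for(case))
```

Returning an explicit "unclassified" result, rather than defaulting to the minimal tier, keeps unreviewed systems visible in the register.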

General-purpose AI systems are AI systems that have a wide range of possible uses, both intended and unintended by their developers. They can be applied to many different tasks in various fields, often without substantial modification or fine-tuning. These systems are sometimes referred to as 'foundation models' and are characterized by their widespread use as pre-trained models for other, more specialized AI systems. For example, a single general-purpose AI system for language processing can serve as the foundation for several hundred applied models (e.g. chatbots, ad generation, decision assistants, spambots, translation), some of which can then be further fine-tuned into applications tailored to individual customers. Nor are these systems limited to a single type of input: they can process audio, video, textual and physical data (e.g. Chinchilla, Codex, DALL·E 2, Gopher, GPT-3).

Moreover, for high-risk AI systems the AI Act requires training, validation and testing data sets that are relevant, sufficiently representative and, to the best extent possible, free of errors and complete in view of the intended purpose of the system. Such systems must also be designed so that they can be effectively overseen by people, rather than relying solely on automated processes, in order to prevent bias, profiling or other dangerous and harmful outcomes.
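Terms such as "sufficiently representative" and "complete" are not defined as numeric tests in the Act, but teams often track measurable proxies for them. The minimal sketch below assumes two hypothetical proxies: completeness as the share of records with no missing required fields, and representativeness as each group's share in the data staying within a chosen tolerance of a reference population share. The field names, reference shares and 5-percentage-point tolerance are illustrative assumptions.

```python
from collections import Counter


def completeness(records: list[dict], fields: list[str]) -> float:
    """Share of records in which every required field is present and not None."""
    if not records:
        return 0.0
    complete = sum(all(r.get(f) is not None for f in fields) for r in records)
    return complete / len(records)


def representation_gaps(records: list[dict], group_field: str,
                        reference: dict[str, float], tolerance: float = 0.05) -> dict[str, float]:
    """Groups whose share in the data differs from the reference share by more than `tolerance`."""
    counts = Counter(r.get(group_field) for r in records)
    total = len(records)
    gaps = {}
    for group, expected in reference.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)  # positive = over-represented
    return gaps


data = [
    {"age_group": "18-39", "income": 30_000},
    {"age_group": "18-39", "income": 42_000},
    {"age_group": "18-39", "income": None},
    {"age_group": "40-64", "income": 55_000},
]
print(completeness(data, ["age_group", "income"]))  # 0.75
print(representation_gaps(data, "age_group", {"18-39": 0.45, "40-64": 0.40, "65+": 0.15}))
```

Checks like these can only flag potential problems; whether a data set is adequate for its intended purpose still requires human judgment.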

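The AI Act's obligations apply in stages: the prohibitions from 2 February 2025, the rules for general-purpose AI models from 2 August 2025 and most remaining provisions from 2 August 2026, with some high-risk requirements following in August 2027. This gives companies time to adjust and prepare. In the meantime, companies are encouraged to comply voluntarily ahead of the deadlines, for example through the Commission's AI Pact, but there are no penalties if they do not. Once the rules apply, the penalties for non-compliance are significant: for enterprises, fines range from 1% to 7% of total worldwide annual turnover for the preceding financial year, depending on the infringement, or fixed amounts of €7.5 million to €35 million, whichever is higher.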
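The turnover percentages work alongside fixed caps. Under the Act's penalty provisions, fines reach up to €35 million or 7% of worldwide annual turnover for prohibited practices, up to €15 million or 3% for non-compliance with most other obligations, and up to €7.5 million or 1% for supplying incorrect, incomplete or misleading information, whichever is higher for enterprises and whichever is lower for SMEs, including start-ups. The sketch below computes only these maximum caps; the tier keys and the function name `max_fine_eur` are hypothetical, and fines actually imposed are set case by case up to the cap.

```python
from decimal import Decimal

# Maximum fines per infringement tier: (fixed cap in EUR, share of worldwide annual turnover).
PENALTY_TIERS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "other_obligation": (Decimal("15000000"), Decimal("0.03")),
    "incorrect_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Upper bound of the fine for one infringement tier (not the fine actually imposed)."""
    fixed_cap, turnover_share = PENALTY_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * turnover_share
    # Enterprises: whichever is higher; SMEs and start-ups: whichever is lower.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


print(max_fine_eur("prohibited_practice", Decimal("2000000000")))  # 140000000.00
print(max_fine_eur("other_obligation", Decimal("100000000"), is_sme=True))  # 3000000.00
```

`Decimal` is used instead of `float` so that monetary amounts are computed exactly.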

The AI Act is the first comprehensive regulatory framework for the development and use of artificial intelligence (AI) in the European Union (EU), allowing companies to innovate in a legislated and safe environment. Through measures such as regulatory sandboxes, the law aims to give start-ups and small and medium-sized enterprises opportunities to develop and train AI models before their release to the general public.

General purpose AI systems are defined as the AI systems that have a wide range of possible uses, both intended and unintended by the developers. They can be applied to many different tasks in various fields, often without substantial modification and fine-tuning. These systems are sometimes referred to as 'foundation models' and are characterized by their widespread use as pre-trained models for other, more specialized AI systems. For example, a single general purpose AI system for language processing can be used as the foundation for several hundred applied models (e.g. chatbots, ad generation, decision assistants, spambots, translation, etc.), some of which can then be further fine-tuned into a number of applications tailored to the customer. Of course, they are not limited to a single type of information input.

They can process audio, video, textual and physical data (e.g. Chinchilla, Codex, DALL•E 2, Gopher, GPT-3, etc.).

Moreover, the AI ACT regulation requires all parts involved with the development of AI technologies to use training, validation and testing data sets relevant and sufficiently representative to the purpose of the AI system, free of errors and complete in view of the intended purpose. It is considered necessary to ensure human oversight rather than automated processes in order to prevent bias, profiling or dangerous and harmful outcomes.

The EU AI ACT will not be enforced until 2025, allowing companies to adjust and prepare. Before then, companies will be urged to follow the rules in 2024 voluntarily, but there are no penalties if they don’t. However, when it is eventually implemented, the penalties for non-compliance with the AI ACT are significant; if the offender is an enterprise, they range from 1% to 7% of its total worldwide annual turnover for the preceding financial year.

AI ACT is the first comprehensive regulatory framework for the development and/or use of artificial intelligence (AI) in the European Union (EU), allowing companies to innovate in a legislated and safe environment. The law aims to offer start-ups and small and medium-sized enterprises opportunities to develop and train AI models before their release to the general public.

General purpose AI systems are defined as the AI systems that have a wide range of possible uses, both intended and unintended by the developers. They can be applied to many different tasks in various fields, often without substantial modification and fine-tuning. These systems are sometimes referred to as 'foundation models' and are characterized by their widespread use as pre-trained models for other, more specialized AI systems. For example, a single general purpose AI system for language processing can be used as the foundation for several hundred applied models (e.g. chatbots, ad generation, decision assistants, spambots, translation, etc.), some of which can then be further fine-tuned into a number of applications tailored to the customer. Of course, they are not limited to a single type of information input.

They can process audio, video, textual and physical data (e.g. Chinchilla, Codex, DALL•E 2, Gopher, GPT-3, etc.).

Moreover, the AI ACT regulation requires all parts involved with the development of AI technologies to use training, validation and testing data sets relevant and sufficiently representative to the purpose of the AI system, free of errors and complete in view of the intended purpose. It is considered necessary to ensure human oversight rather than automated processes in order to prevent bias, profiling or dangerous and harmful outcomes.

The EU AI ACT will not be enforced until 2025, allowing companies to adjust and prepare. Before then, companies will be urged to follow the rules in 2024 voluntarily, but there are no penalties if they don’t. However, when it is eventually implemented, the penalties for non-compliance with the AI ACT are significant; if the offender is an enterprise, they range from 1% to 7% of its total worldwide annual turnover for the preceding financial year.

AI ACT is the first comprehensive regulatory framework for the development and/or use of artificial intelligence (AI) in the European Union (EU), allowing companies to innovate in a legislated and safe environment. The law aims to offer start-ups and small and medium-sized enterprises opportunities to develop and train AI models before their release to the general public.

Διαβάστε επίσης...

15 Απρ 2025

Causal AI in business risk management – the Insurance paradigm

15 Απρ 2025

Causal AI in business risk management – the Insurance paradigm

15 Απρ 2025

Causal AI in business risk management – the Insurance paradigm

16 Οκτ 2024

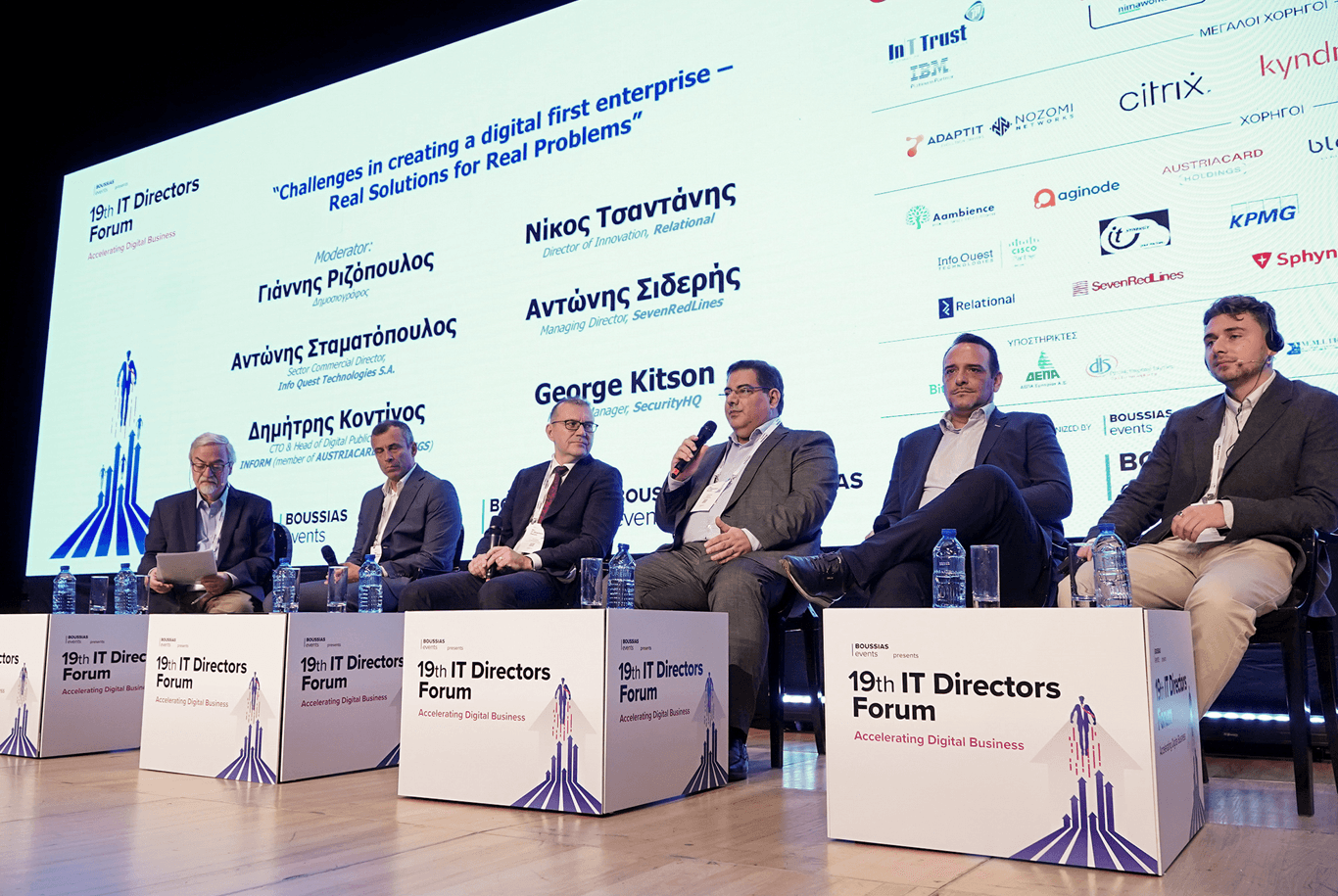

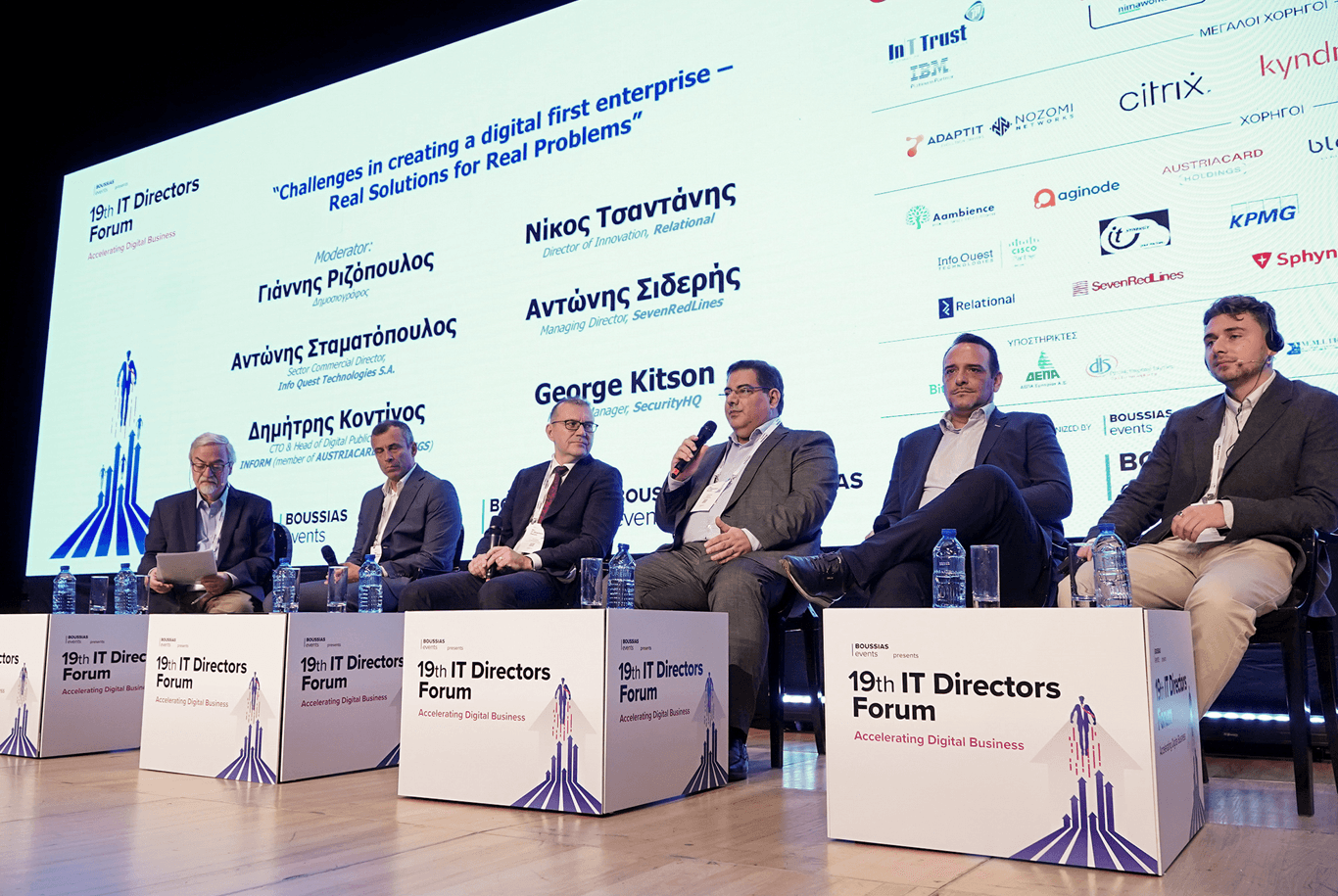

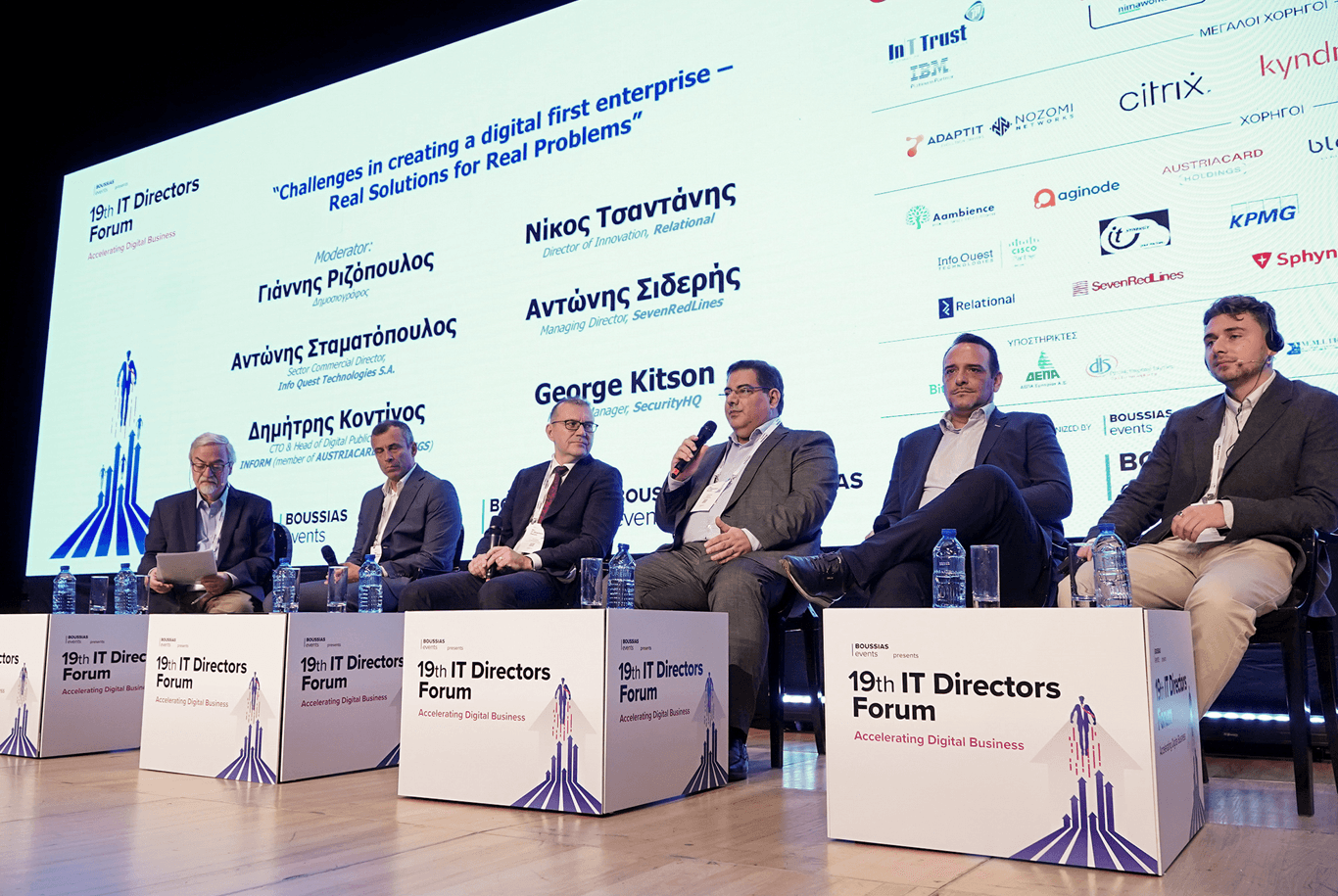

Η Seven Red Lines χορηγός στο 19ο IT Directors Forum

16 Οκτ 2024

Η Seven Red Lines χορηγός στο 19ο IT Directors Forum

16 Οκτ 2024

Η Seven Red Lines χορηγός στο 19ο IT Directors Forum

9 Οκτ 2024

Ανάλυση ιατρικών δεδομένων: Παρουσίαση εργασίας στο 2ο Πανελλήνιο Συνέδριο Ιατρικής Φυσικής

9 Οκτ 2024

Ανάλυση ιατρικών δεδομένων: Παρουσίαση εργασίας στο 2ο Πανελλήνιο Συνέδριο Ιατρικής Φυσικής

9 Οκτ 2024